Essential IT Admin Skills for Dotnet Developers

The lack of skilled IT workers is hurting the deployment of emerging technology, according to a new survey from Gartner. In areas from cloud to cybersecurity, this crisis is expected to last for years to come.

Editorial Note: Although this article uses Windows Server 2008 and SQL Server 2008 R2, many concepts are applicable for newer versions too. Please use your judgment while applying these suggestions for newer versions.

1. Designing a reliable production architecture

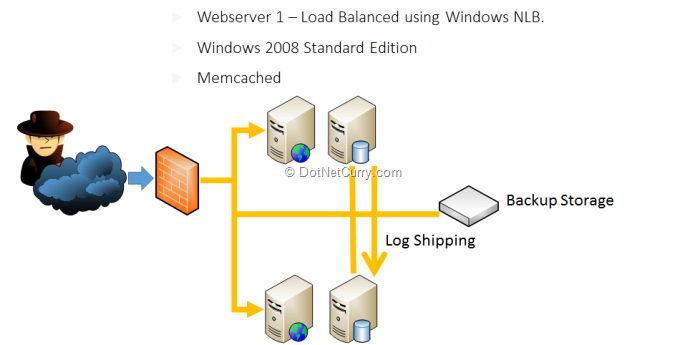

Say you are still a garage startup and money is your primary concern. You want to spend the least amount of money to buy hardware yet you want to have a decent reliability around your setup. So even if one server goes down, your website should be able to recover within minutes. Let’s look at the cheapest possible production architecture money can buy, that offers some decent reliability and survives a complete server failure.

Figure 1: Super cheap production architecture

Highlights from this architecture:

Both servers are Windows 2008 R2 web/standard editions to save cost.

Both servers have SQL Server Web Edition installed provided by hosting company. If you want to buy your own servers, then you have to purchase Standard Edition.

One server is called Primary Database Server that has the databases on it.

The other standby server has standby copies of the databases configured through SQL Server’s Log Shipping feature.

Both servers have IIS and web app is running on both.

Both servers have Windows Network Load Balancing configured to load balance the web traffic between both servers. NLB is configured on port 80 to distribute web traffic equally on both servers. Alternatively, you can ask your hosting provider to give you two load balanced ports on their firewall or load balancer.

Both servers have two disks and Windows Disk Mirroring is configured to provide a software RAID1 solution. This way both disks have the exact same data. Even if one disk fails, there will be no disruption.

This configuration gives you pretty decent reliability. If one server fails, the web traffic will be automatically diverted to the other server. But the SQL Server Standby databases have to be manually brought online and you need to change the web.config on the surviving server to point to the surviving SQL Server.

However, you should go with this super cheap configuration only if are a garage Startup and you are funding from your own pocket. If you want a decent reliability and performance, you need to go for the next architecture.

2. Decent production architecture for 99% availability

The first thing you need to do is separate out the databases into their own servers.

Figure 2: Decent Production Architecture

Whenever you put SQL Server on a server, you will see all your RAM gets consumed. It is designed to allocate all available memory. So, IIS and SQL Server will be fighting for RAM unless you go to the SQL Server Management Studio and fix the maximum memory it will consume. But you shouldn’t do that unless you are desperate. You should put SQL Server on its own server with as much RAM as possible. The best thing would be to have the same amount of RAM as the size of data in your database. But RAM is expensive.

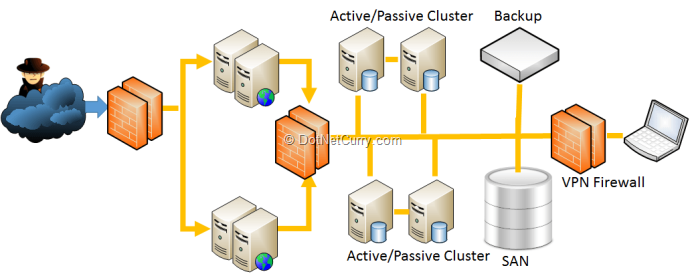

3. Super reliable hosting architecture for 99.99% availability

This is where it gets really expensive and really rock solid. If you have a business critical application that earns millions per year and have to be up and running 24x7, you need an architecture that looks like this:

Figure 3: Super reliable and expensive hosting architecture

There are enough redundancy at every level. First, there are redundant firewall. Yes, that’s the last thing you think about – having a standby firewall. But once we had this nightmare where our one and only firewall was down and entire website was down. We learnt the hard way to have passive firewall since then. Then you have enough web server available so that even if 2 of them are down, you will have no issues serving the traffic.

A common mistake IT guys make while designing production architecture is – they do capacity analysis to find out how many server they need and they add one server to it and buy that many servers. But when you have one server down, you might have to release a critical patch on your web app. During that time, you will have to take one server out at a time and patch them one after another. At that moment, you will have to serve traffic while both the servers are down. That’s why whenever you calculate how many servers you need to serve the traffic as per the projected volume, always ensure you can serve that traffic even if two servers are down.

The other important addition in this architecture is SAN – Storage Area Network. Hosting providers have their SAN, which is like a monstrous collection of disks controlled by super awesome disk controllers. Servers connect to SAN via fibre channel for lightning fast Disk I/O. You purchase volumes from SAN. For ex, you ask for 5x200 GB volumes from the SAN. Then you get those volumes available in your server and the server can read- write on those volumes. SAN is very expensive. Only databases and highly critical data are stored on SAN.

SAN offers the maximum reliability and performance. They are faster than local disks. SAN can offer you complete disk fail protection. Moreover, SAN offers on-the-fly increase of disk volumes. If your database is growing faster than you can handle and you are about to run out of disk space, you can increase the size of the volume on-the-fly.

4. Checklist for creating IIS websites

Here are couple of things we always do while creating a website on IIS:

- Create each website on its own pool, using App Pool identity. This creates a new user for each app pool. This way we can give granular permissions to certain App Pool users and do not have to meddle with NETWORK_SERVICE account.

- Create the App_Data folder and allow write access to NETWORK_SERVICE, IUSR and IIS APPPOOL\

. - Enable Static and Dynamic Compression.

- Turn on Content Expiration from IIS -> Website -> Http headers -> Common Headers. Set to 30 days. This makes all the static file cacheable on the browser. You will get significant reduction in web traffic when you turn this on.

- From IIS -> Website -> Bindings, we map both www.yourdomain.com and yourdomain.com. Alternatively we setup a redirector website to redirect traffic on yourdomain.com to www.yourdomain.com. The later is better because this way users are never browsing your website over yourdomain.com and they will always be on www.yourdomain.com. If your website is such that users copy and share links to pages frequently, you should go for this redirection.

- From IIS Logging, turn on “Bytes Sent” and “Bytes Received” fields. Change creating log files to hourly if you have heavy traffic. This way each log file size will be within manageable size and if you have to parse the log files, it won’t take too long. Whenever we have to diagnose slow pages, we first look into IIS log and ensure if a page is taking high time to execute, it is not because of large “Bytes Sent”.

- Map 404 to some dignified page from IIS -> Website -> Error Pages -> 404. You can set it to redirect to your websites homepage.

- Copy website code to each server and synchronize file last modification date and time. This way each file will have the exact same last modified date time on each server. That means if browser gets jquery.js file from webserver1, and it tries to hit webserver2 on another page visit and asks webserver2 about the last modified date time of jquery.js, it will get the exact same date time and it won’t download the whole file again.

- Create a static website hosted on a different domain. Eg. Staticyourdomain.com and have all static files served from this domain. It prevents large ASP.NET cookies from being sent over static files.

These are the techniques we have learnt over the years to avoid common mistakes and tune website to serve well cached traffic to the browser delivering faster page load performance.

5. Removing unwanted HTTP headers

There are some HTTP response headers that make hackers’ lives easier in order to detect what version of IIS you are running on and what .NET version you are on. You can remove most of those headers using web.config. But there are some that cannot be done and you need write a custom HttpModule to remove those from every response. Especially the ETag header that IIS 7 has made it impossible to remove via any configuration. Removing ETag is one of the best way to get better caching from browser for the static files on your website.

Here’s a HTTP Module that can remove the headers that you don’t need:

Before the HttpModule:

Cache-Control:private

Content-Length:445

Content-Type:text/html; charset=utf-8

Date:Sun, 31 Mar 2013 11:19:36 GMT

Server:Microsoft-IIS/8.0

Vary:Accept-Encoding

X-AspNet-Version:4.0.30319

After the HttpModule:

Cache-Control:private

Content-Length:445

Content-Type:text/html; charset=utf-8

Date:Sun, 31 Mar 2013 11:16:03 GMT

Vary:Accept-Encoding

6. Synchronizing File Date Time across multiple servers

When you deploy the same website on multiple webservers, you end up having each file getting different last modified date. As a result, each IIS produces different ETag for the static files. If user is hitting different servers for the same file (due to load balancing), each IIS is responding with different ETag and thus browser downloading the same file over and over again. If you have 3 servers, same user has most likely downloaded the same file thrice. This gives poor page load performance.

Moreover, if you want to mirror two or more locations using one location as a base, not only you need to copy the same files but you need to set the same Create Date and Last Modified Date on the files. Otherwise they aren’t true mirror. There are various use cases where you need a complete mirror not only at file content level but also at file date time level.

Here’s a powershell script that will do the job for you:

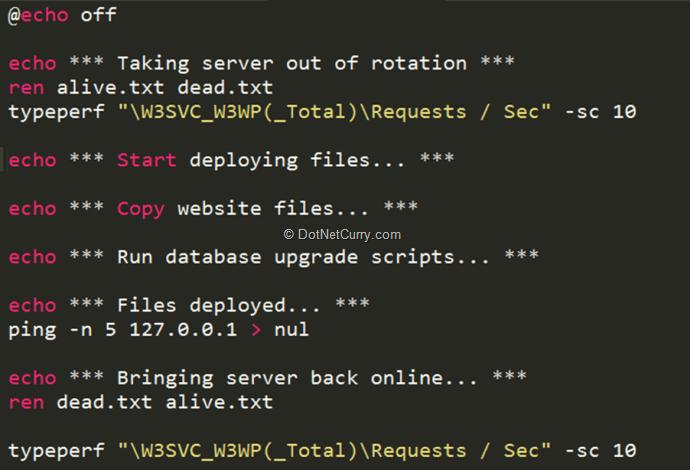

7. Automate Production deployment with scripts

When you have multiple webservers running your website, it becomes difficult and time consuming to deploy code on each server manually. You have to take one server out of load balancer at a time, deploy the new code, warm it up, and put it back on to the load balancer. Then take another server out and keep repeating the steps until all the webservers have the latest code. There are always chances of human error screwing up one server, which becomes very difficult to figure out once the site is up and running and you hear users reporting random problems.

Here’s a scripted approach we take to deploy code on production:

Figure 4: Deploy code on production via script

This is not a complete script for deploying sites on production but a concept. I want to show you the ideas behind such automation. You need to add the real code to deploy your website’s codebase as it suits you.

First, there’s a file on the website called alive.txt. We configure our load balancer to hit this file every second to make sure the webserver is alive. If Load Balancer gets a HTTP 200 then it assumes the website is up and running. But if it gets some other response then it assumes the site is down and it takes the webserver out of the rotation. It stops sending any more traffic to it until it gets the alive.txt response.

So during deployment we rename this file so that load balancer stops sending traffic to this webserver. Then we give it some time to drain out the existing traffic. When the server has finished running all the in-flight requests, we see Requests/Sec showing as 0 from the typeperf command. Then the code deployment starts. There are various ways to do it. You can have the production binaries stored in a network share and do xcopy from that share into the server. Or you can have network binaries stored in subversion and download the binaries from subversion.

Then the script looks for .sql files in a specific folder and it runs them in proper order. We name the .sql files as 001, 002, 003 so that the exact sequence is maintained. The script connects to SQL Server using osql and executes the sql scripts one by one.

Once the deployment is over, it renames the dead.txt back to alive.txt. Then load balancer detects the file is available again and it puts the webserver back into rotation.

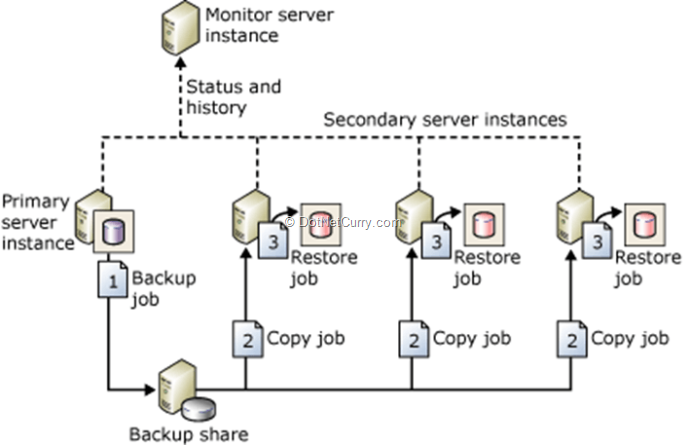

8. Create readonly copy of databases using SQL Server Log Shipping

SQL Server Log shipping is a very easy to use technology to maintain standby database server in order to survive a server failure. But you can use log shipping to create readonly copy of databases where you can divert readonly queries. This is a great way to scale out your database. You don’t have to rely on only one server to serve all database traffic. You can distribute traffic across multiple servers.

Figure 5: SQL Server Log Shipping to create readonly databases

Here you have a primary server where all the INSERT, UPDATE, DELETE happens. Then the database is copied to several other servers via log shipping, where a readonly copy of the database is maintained. You can configure log shipping to ship changes made in the primary database every minute or every 15 mins. All these other servers have the database in readonly mode and it can serve SELECT queries. If you have an ecommerce website, then you have the product catalog that changes infrequently. You can then divert all queries to the catalog tables to the log shipped servers and distribute the database load.

You should never produce MIS reports or take batch extracts from the primary database where the INSERT, UPDATE, DELETE are happening. You should always do these on log shipped standby databases.

9. Housekeeping databases

If you have transactional tables where records get inserted very frequently and unless you remove older records from the table, you run out of space within weeks, then it is a challenge to periodically purge such tables without bringing down the tables. Especially if you have a 24x7 website then it becomes very difficult to find a slot where you can take an outage and do a purge that takes 15-30 mins to finish. During purge operations, the table gets locked and all other queries on that table times out. So, you need to find a way to purge records consuming the least amount of database resource.

There are various ways to do this but we found the following approach having the least footprint on the database:

The idea here is to do a steady small batch of delete at a time until all the records we want to purge are deleted. This query can go on for hours not a problem. But during that time, there will be no significant stress on the database to cause other queries to timeout or degrade significantly.

First we set the isolation level. This is absolutely key. This isolation level tells SQL Server that the following query does not need locking. It can read dirty data, does not matter. So, there’s no reason for SQL Server to lock records in order to ensure the query gets properly committed data. Unless you are a bank, you should put this in all your stored procs and you will see sometimes 10 times better throughput from your database for read queries.

Next we read the row IDs from the source table and store in a table variable. This is the only time we are going to do a SELECT on the source table so that SQL Server does not have to scan the large table again and again.

Then we keep picking 1000 row IDs at a time and delete the rows from the table. After each delete, we give SQL Server 5 seconds to rest and flush the logs.

10. Tuning database indexes

Over the time, data in tables get fragmented as data gets inserted, updated and deleted. If you leave the tables alone, the indexes will get slower and slower and queries will get slower. If you have millions of records on a table and you start having significant fragmentation on the tables, over the time queries will get so slow that they will start timing out. In order to keep the tables fast and lean, you need to regularly rebuild the indexes. Here’s a SQL snippet that will run through all the indexes on the database and see if the indexes are heavily fragmented. If they are then it will issue an index rebuild on them.

http://www.mssqltips.com/sqlservertip/1165/managing-sql-server-database-fragmentation/

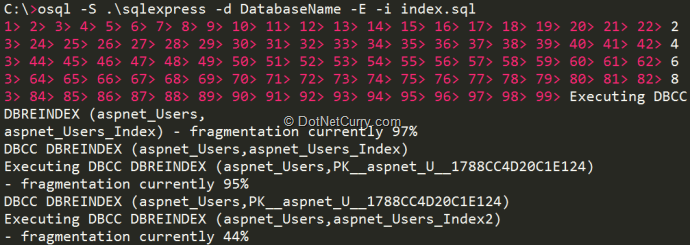

When you run the query, it looks like this:

Figure 6: Index rebuild

You should do this during weekends when your traffic is lowest. However, there’s a catch. SQL Server Standard Edition does not allow online index rebuild. This means during index rebuild the index will be offline. So, most of your queries will timeout. If you want to have the index online during the rebuild then you will need SQL Server Enterprise Edition, which is very expensive.

11. Monitoring SQL Server for performance issues

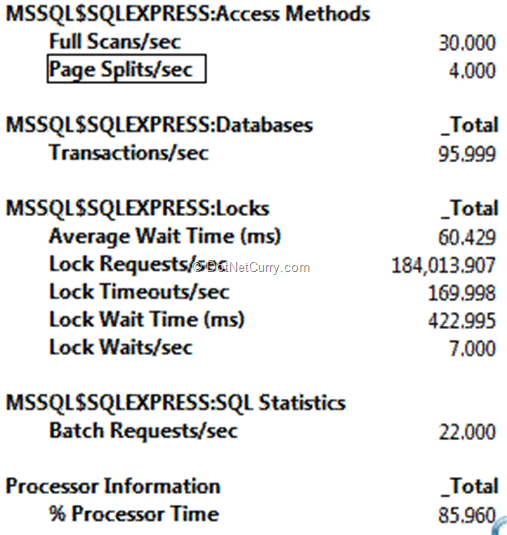

Windows Performance Monitor is a great way to monitor performance of your databases. Just go to Start -> Run, type “perfmon” and you will get the performance monitor. From there, click on the + sign and add the following counters:

Figure 7: Monitor SQL Server performance

These are some key counters that can give you indication about how well your database is performing and if there’s a performance problem, what is the bottleneck.

For example, here you see very high Full Scans/Sec. This means you have queries that are not using index and scanning the entire table. So, you need to investigate which queries are causing high IO and see their execution plan to find out where they are missing index. You can run SQL Server Standard Reports by right clicking on the server on SQL Server Management Studio and selecting Reports and then the Top 10 IO and Top 10 CPU consuming queries. They will show you the worst queries. That’s your starting point to tune.

The other most significant one is Lock Requests/Sec. It should ideally be zero. But if you have more than 100, then it is usually bad. It is an indication that you need to put SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED in your queries.

12. Sending mails from websites

We usually use the SmtpClient to send emails via some SMTP server. Usually you have a common email server for your company and your websites connect to that email server to send mails. But if you configure SmtpClient to connect to the mail server, then it is going to open a connection to the email server every time you call the Send() function. Establishing a SMTP session, uploading the email message, then the attachments is a very chatty process. There are many back and forth communication that happens between the webserver and email server. It makes your pages to slow down when you try to synchronously send email. If your smtp server goes down, your website throws error and the messages are lost.

Instead of configuring a smtp server settings in web.config, you should configure to use the local IIS SMTP Service. On each webserver, install the IIS SMTP Service and configure it to relay the messages to the company’s email server. This way your website will connect to the local server and queue the messages into the IIS SMTP service. Since this process happens within the server, it will be a lot faster than connecting to a distant email server. IIS SMTP service will gradually pick the messages and send them to the company’s email server.

An even better approach is to use the IIS SMTP service pickup folder. SmtpClient can just serialize the whole message in a pickup folder usually located at C:\INETPUB\MAILROOT\PICKUP. This way even if the IIT SMTP Service is down, it will still be able to write the message and complete the execution of the page. Then IIS SMTP service will pick up the messages from this folder and send them one by one.

All you have to do is have this in your web.config:

13. Regular server restart

Sometimes we have bad code on production that causes memory leaks, crashes the app pool randomly, allocates COM Objects but does not release them gracefully, opens TCP connections and does not close them properly. This causes things to go bad at operating system level and you end up with an unhealthy server. You start having weird problems and crashes. Sometimes IIS stops responding. Sometimes remote desktop does not work. At that point, you have no choice but to restart Windows.

Especially if you outsource development to cheapest possible resource, you will have no choice but to regularly restart servers.

We have seen such problems happen so many times that we nowadays we regularly restart windows servers at least once every quarter.

14. Diskcleanup

Windows downloads gigantic windows updates, creates gigabytes of crash dump when app pool crashes, fills up temporary folders with gigabytes of garbage and so on. If you have limited storage on your webservers, for example if you are on those cheap virtual servers that comes with 20 GB space, then you need to schedule regular diskcleanup.

Fortunately Windows comes with a pretty decent cleanup tool. First you run:

Put some number there. It will run Disk Cleanup tool in a record mode where it will let you select what you want to clean. Then when you click OK and exit, it will remember those settings against that number.

Figure 8: Diskcleanup in record mode

Then you schedule a task in Windows Task Scheduler to run the following command:

Here you put the same number that you have used to record the settings.

When you configure the task, ensure you have these settings turned on:

Figure 9: Task scheduler settings

This will give Cleanmgr the necessary privilege to delete files from protected folders.

Hope you enjoyed reading these suggestions. Let us know if you happen to use or are using any of these skills in your application.

Share your comments on this Article:(0)

Leave a Reply